I had been using consumer SSDs in my ESXi host successfully for a few years. After migrating to Proxmox, I tried ZFS as storage for my virtual machines. For the first few weeks I was very happy with it. Then I noticed the wearout levels on my new SSDs increasing very quickly. The Proxmox support forum and Reddit are full of users saying that Proxmox destroys SSDs, but I thought this was just a rumor. So what is the truth?

The problem lies in the design of ZFS. It is designed to keep your data safe, and the way it works causes write amplification. Quoting user “Dunuin”:

According to my ZFS benchmarks with enterprise SSDs I’ve seen a write amplification between factor 3 (big async sequential writes) and factor 81 (4k random sync writes) with an average of factor 20 with my homeserver measured over months.

In my setup I have just a ZFS mirror, no RAIDZ. I am running a few virtual machines:

- zabbix, including mysql

- nextcloud (with another mysql instance)

- web & mail hosting

- samba fileserver

- Windows VM

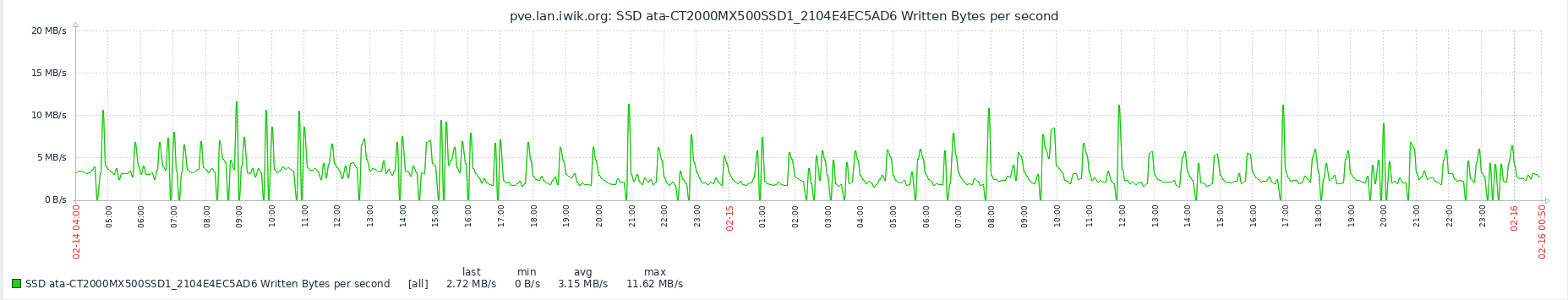

These VMs generate nearly 1 MB/s of writes on average. But the actual writes to the SSD are about 3.7-3.8 times higher due to ZFS write amplification. In this situation a consumer SSD will die very soon. This is the graph of one SSD in the ZFS mirror:

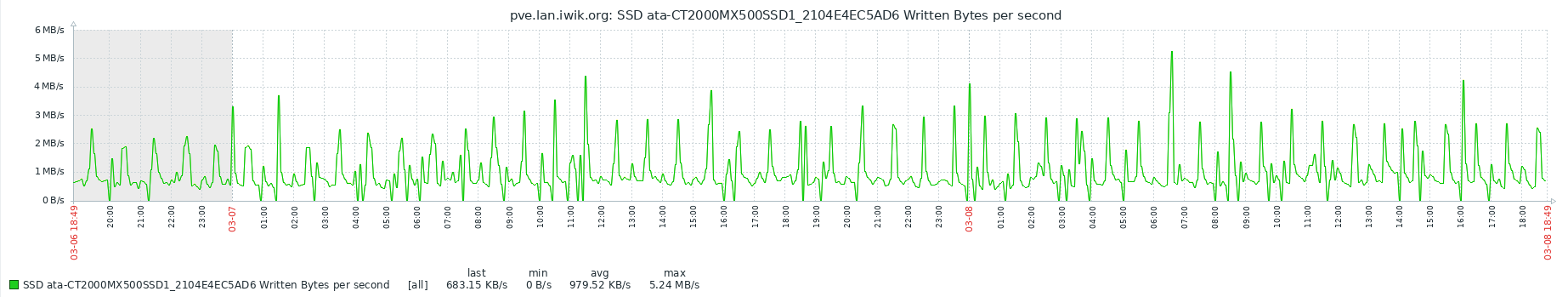
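To put these numbers in perspective, here is a minimal sketch of the arithmetic, assuming a constant write rate. The 1 MB/s and the 3.75x factor (the middle of 3.7-3.8) come from the measurements above. The 150 TB endurance rating is a hypothetical value for illustration only; check the datasheet of your own drive. The SSD's internal write amplification is not included.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def tb_written_per_year(vm_write_mb_s, amplification=1.0):
    """Decimal terabytes reaching the disk per year at a constant write rate."""
    disk_mb_s = vm_write_mb_s * amplification
    return disk_mb_s * SECONDS_PER_YEAR / 1_000_000  # MB -> TB


def years_to_rated_tbw(rated_tbw_tb, vm_write_mb_s, amplification=1.0):
    """Years until the rated endurance (TBW) is used up."""
    return rated_tbw_tb / tb_written_per_year(vm_write_mb_s, amplification)


VM_WRITES_MB_S = 1.0       # from the post: nearly 1 MB/s from the VMs
ZFS_AMPLIFICATION = 3.75   # from the post: 3.7-3.8x, midpoint used here
HYPOTHETICAL_TBW = 150.0   # assumption, not from the post

without_zfs = tb_written_per_year(VM_WRITES_MB_S)
with_zfs = tb_written_per_year(VM_WRITES_MB_S, ZFS_AMPLIFICATION)
print(f"VM writes only:     {without_zfs:.1f} TB/year")
print(f"With amplification: {with_zfs:.1f} TB/year")
print(f"Years to {HYPOTHETICAL_TBW:.0f} TBW: "
      f"{years_to_rated_tbw(HYPOTHETICAL_TBW, VM_WRITES_MB_S, ZFS_AMPLIFICATION):.2f}")
```

A heavier workload or a higher amplification factor (the quoted benchmarks go up to 81 for 4k random sync writes) shortens this estimate proportionally.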

After migrating to LVM (on mdadm RAID1), the difference is clearly visible. The VM workload is the same.

Don’t use consumer SSDs with ZFS for virtualization. You can kill your drives in less than a year, depending on your workload. I think the Proxmox team should put a big warning about this problem in their wiki. Their wiki is nearly perfect, but it is missing this key point about ZFS and (consumer) SSD wear. It is only mentioned in the ZFS SSD benchmark FAQ:

To quote the PVE ZFS Benchmark paper FAQ page 8 again:

Hello, can you tell me where you got the graphs for the hard disk statistics? I cannot find them in my installation.

Thank you and best regards

Hi, these graphs come from my Zabbix; they are not included in PVE. Zabbix does not include these graphs by default either, it is a customized solution. As an alternative, you can check the collectd disk plugin (https://collectd.org/wiki/index.php/Plugin:Disk); I think it may be easier to install and get working.

But it also makes sense to ask in the Proxmox forum for a feature request (per-disk graphs). What do you think?
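If you only want a quick number without setting up monitoring, the per-disk write counters are available on any Linux host in `/proc/diskstats`. The following is a minimal sketch, not what my Zabbix setup does; the function names are made up for illustration. It relies on the kernel's documented layout, where the device name is the third field and sectors written is the tenth, counted in 512-byte units.

```python
SECTOR_BYTES = 512  # /proc/diskstats counts 512-byte sectors regardless of the device


def bytes_written(diskstats_text):
    """Map device name -> total bytes written since boot."""
    totals = {}
    for line in diskstats_text.splitlines():
        fields = line.split()
        if len(fields) < 10:
            continue  # skip empty or malformed lines
        name = fields[2]
        sectors_written = int(fields[9])
        totals[name] = sectors_written * SECTOR_BYTES
    return totals


def write_rate_mb_s(before, after, seconds, device):
    """Average write rate in MB/s between two snapshots taken `seconds` apart."""
    return (after[device] - before[device]) / seconds / 1_000_000
```

To use it, read `/proc/diskstats` twice (for example 60 seconds apart), pass both texts through `bytes_written`, and call `write_rate_mb_s` with your device name, such as `sda`. Comparing that rate with the writes reported inside the VMs gives a rough view of the amplification.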

Hi, my data on SSDs is very different.

I have some server systems in 24/7 production.

The oldest, built on 28/05/2019 according to stat, has 4x 500 GB inexpensive Samsung Evo 850 and 4x 512 GB Samsung Pro 860 in a mixed 8x 500 GB RAIDZ.

This machine virtualizes a PBX, an ERP with over 100 users, a mail system that received thousands of emails until our switch to 365 in mid 2021, and a custom app for internal use.

The most worn are the 850s, with a peak of 40% wearout on one disk over 3.5 years.

Another example: a system built on 23/04/2021, 8x 1 TB Samsung 870 Evo in RAIDZ, an NFS share of 7 TB with 30 production VMs in daily use 8/5; the most worn drive reports 21% over 20 months.
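As a rough reading of these figures, here is a small sketch that extrapolates the reported wearout linearly to 100%. Linear wear is an assumption; real drives and workloads may not behave that way, and the wearout percentage itself is the drive's own estimate.

```python
def projected_life(elapsed, wearout_percent):
    """Linear extrapolation: time to reach 100% wearout, in the units of `elapsed`."""
    if wearout_percent <= 0:
        raise ValueError("wearout_percent must be positive")
    return elapsed * 100.0 / wearout_percent


# Figures reported above
print(f"850 Evo, 40% in 3.5 years:   {projected_life(3.5, 40):.2f} years")
print(f"870 Evo, 21% in 20 months:   {projected_life(20, 21) / 12:.2f} years")
```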

That is interesting. Could you also check the total bytes written? Then we can compare.

Perhaps using qcow2 files instead of zvols would help?

hello~

Is it safe to use consumer SSDs to run ESXi?

THX